Serverless Computing in Cloud Infrastructure

In the age of cloud computing, traditional server management requires constant oversight, leading to inefficiencies and elevated costs. Serverless computing provides an elegant solution by eliminating the need for such management, streamlining operations, and reducing expenses.

In 2017, the serverless market was estimated at around USD 3B. It is now projected to reach USD 20B by 2025.

With numerous advantages over traditional cloud computing and server-centric infrastructure, serverless architecture provides more flexibility, scalability, simplified deployment, and reduced cost.

This exponential growth underscores its transformative potential. Businesses seek faster and cheaper solutions, and serverless computing offers many benefits and opportunities.

Let's dive into the future of serverless cloud infrastructure, where innovation knows no bounds.

Serverless Computing: An Evolution of Cloud Computing

Serverless computing is a groundbreaking concept in cloud infrastructure. It represents a paradigm shift in how applications are developed, deployed, and managed. Unlike traditional server-centric approaches, it simplifies routine infrastructure management. This feature allows developers to focus solely on writing code and delivering value to users.

At its core, serverless computing operates on a pay-per-use model. Users are charged based on the actual computing resources consumed by their applications rather than for provisioned servers or virtual machines.

This not only reduces operational overhead but also enables more efficient resource utilization.
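To make the billing difference concrete, here is a rough sketch comparing the two models. Every rate and workload figure in it is a made-up placeholder chosen for illustration, not any provider's actual pricing.

```python
# All rates below are hypothetical placeholders, not real provider pricing.
PRICE_PER_GB_SECOND = 0.0000167    # assumed pay-per-use compute rate (USD)
PRICE_PER_MILLION_REQUESTS = 0.20  # assumed request fee (USD)
SERVER_PRICE_PER_HOUR = 0.05       # assumed price of one always-on VM (USD)


def pay_per_use_cost(requests: int, avg_seconds: float, memory_gb: float) -> float:
    """Cost when billed only for the compute actually consumed."""
    compute = requests * avg_seconds * memory_gb * PRICE_PER_GB_SECOND
    request_fee = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + request_fee


def provisioned_cost(hours: float, servers: int = 1) -> float:
    """Cost when billed for provisioned servers, whether busy or idle."""
    return hours * servers * SERVER_PRICE_PER_HOUR


# One month (~730 hours) of a light workload: 1M requests, 200 ms each, 512 MB.
serverless = pay_per_use_cost(1_000_000, 0.2, 0.5)
provisioned = provisioned_cost(730)
print(f"pay-per-use: ${serverless:.2f}, provisioned: ${provisioned:.2f}")
```

Under these assumed rates the light workload is much cheaper on pay-per-use, but the result depends entirely on the inputs: at sustained high traffic the same formula can exceed the fixed server cost, so it is worth rerunning with your own figures.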

Key Characteristics of Serverless Computing:

● Pay-per-use pricing model: Charges are incurred only for actual resource usage, so idle capacity is not billed.

● Event-driven architecture: Functions are triggered by events such as HTTP requests, database changes, or file uploads, enabling responsive and scalable applications.

● Automatic scaling: The platform scales capacity up and down dynamically to handle demand fluctuations, with no manual intervention.

● No server management: Developers are freed from infrastructure management tasks, allowing them to deploy code and deliver value.

● Rapid development and deployment: The serverless framework supports rapid provisioning, fostering agile development cycles that enable faster time-to-market and innovation.
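The event-driven characteristic above can be pictured with a small in-process sketch. The registry, the `on` decorator, and the event type names are all hypothetical stand-ins for what a real platform does when it routes events to functions.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical in-process registry standing in for a platform's event routing.
_handlers: dict[str, list[Callable[[dict], object]]] = defaultdict(list)


def on(event_type: str):
    """Register a function to run whenever an event of this type arrives."""
    def register(func):
        _handlers[event_type].append(func)
        return func
    return register


def dispatch(event: dict) -> list:
    """Invoke every function registered for the event's type."""
    return [handler(event) for handler in _handlers[event["type"]]]


@on("file.uploaded")
def make_thumbnail(event: dict) -> str:
    return f"thumbnail queued for {event['name']}"


@on("http.request")
def say_hello(event: dict) -> str:
    return f"hello from {event['path']}"
```

Calling `dispatch({"type": "file.uploaded", "name": "cat.png"})` runs only `make_thumbnail`; an event type with no registered function simply runs nothing.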

As organizations seek to modernize their applications and accelerate digital transformation, serverless computing emerges as a powerful tool for driving innovation and agility in the cloud-native era.

Core Components of Serverless Architecture

The following core components form the foundation of serverless computing, enabling developers to build scalable, cost-effective, and resilient applications without the complexities of traditional server-centric architectures.

API Gateways

The API gateway serves as the communication layer between the front end and the FaaS layer. Its key job is to map REST API endpoints to the functions that execute the corresponding business logic.

The API Gateway streamlines communication and enhances scalability and flexibility by eliminating the need for traditional servers and load balancers.
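The endpoint-to-function mapping can be sketched in a few lines. This is a toy model of the idea, not any provider's gateway; the route table, the `gateway` function, and the two order functions are hypothetical names.

```python
import json
from typing import Optional


# Hypothetical business-logic functions that would live in the FaaS layer.
def list_orders(request: dict) -> dict:
    return {"orders": []}


def create_order(request: dict) -> dict:
    return {"created": request.get("body", {})}


# The gateway's job in miniature: map (method, path) to a function.
ROUTES = {
    ("GET", "/orders"): list_orders,
    ("POST", "/orders"): create_order,
}


def gateway(method: str, path: str, body: Optional[dict] = None) -> tuple:
    """Route a request to its function and return (status code, JSON body)."""
    func = ROUTES.get((method, path))
    if func is None:
        return 404, json.dumps({"error": "no route"})
    return 200, json.dumps(func({"body": body or {}}))
```

For example, `gateway("POST", "/orders", {"sku": "A1"})` reaches `create_order`, while an unmapped request such as `gateway("DELETE", "/orders")` gets a 404 without invoking any function.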

Functions or Function as a Service (FaaS)

The Functions or Function as a Service (FaaS) layer is at the heart of serverless computing. This component manages multiple function instances that execute specific business logic or code in response to incoming events or requests.

The cloud provider handles the underlying infrastructure, allowing developers to focus solely on writing and deploying code. This level of abstraction simplifies development and enhances agility, as developers no longer need to concern themselves with server provisioning or management.
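A function in this layer is typically just a handler that receives an event and returns a result. The sketch below follows the `(event, context)` signature used by AWS Lambda's Python runtime; the `name` field in the event and the response contents are assumptions made for illustration.

```python
import json


def handler(event: dict, context: object) -> dict:
    """Entry point the platform would call once per incoming event."""
    # The "name" field is an assumed event shape, used here only for illustration.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Locally, the function is just a function: call it with a sample event.
response = handler({"name": "serverless"}, context=None)
```

Note that nothing in the function mentions servers, ports, or processes; that is the part the provider takes over.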

Backend as a Service (BaaS)

Backend as a Service (BaaS) complements the serverless architecture by providing a cloud-based distributed NoSQL database.

This component removes the burden of database administration overheads, allowing developers to focus on building and deploying applications without worrying about managing databases. By leveraging BaaS, organizations can streamline development processes, reduce time to market, and scale their applications more efficiently.

Serverless vs. Traditional Cloud Models

Understanding the differences between cloud models like PaaS, IaaS, and CaaS and emerging paradigms like Serverless Computing is essential for businesses seeking the right fit for their needs.

Each model offers unique benefits and considerations regarding deployment, resource management, scalability, cost-effectiveness, developer flexibility, and maintenance overhead.

Let's see how organizations can make informed decisions with these models:

Platform as a Service (PaaS) vs. Serverless Computing

Serverless computing is fundamentally a form of Platform as a Service (PaaS), but the two differ in ways worth exploring. Some cloud providers treat the terms interchangeably, yet distinctions exist, and serverless computing in the strict sense is often labeled Function as a Service (FaaS).

Distinguishing Factors:

- Autoscaling Configuration: PaaS solutions typically require manual configuration for autoscaling based on demand, whereas serverless solutions inherently scale dynamically.

- Deployment Control: Serverless solutions offer less control over deployment environments than PaaS counterparts.

- Launch Speed: PaaS applications generally take longer to launch, while serverless code runs only when invoked and typically starts quickly, apart from the cold-start latency noted under Cons.
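The autoscaling difference can be pictured with a simple conceptual model. This is not any platform's actual scaling algorithm; the function name and the per-instance concurrency parameter are assumptions used to show the scale-with-demand, scale-to-zero idea.

```python
import math


def desired_instances(in_flight_requests: int, per_instance_concurrency: int = 1) -> int:
    """Instances needed right now; zero traffic means zero instances."""
    if in_flight_requests <= 0:
        return 0
    return math.ceil(in_flight_requests / per_instance_concurrency)
```

With one request per instance, 7 concurrent requests need 7 instances; if each instance can serve 10 at once, 25 requests need 3. A PaaS deployment would instead keep whatever minimum instance count was configured, even when idle.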

Pros:

● Cost Efficiency: Serverless models operate on a pay-per-execution basis, optimizing cost-effectiveness.

● Scalability: Automatic scaling in response to demand fluctuations ensures optimal performance.

● Simplified Deployment: Developers can focus on code development without worrying about operating systems or other infrastructure management.

● Rapid Development: The modular nature of smaller, focused functions facilitates accelerated development cycles.

Cons:

● Cold Starts: Initialization processes can introduce latency in function execution, although some setups mitigate this issue.

● Limited Execution Time: Constraints may exist on the duration of function execution.

● Vendor Lock-In: Migrating between serverless providers can pose challenges due to platform-specific dependencies.

● Debugging Complexity: Limited visibility into underlying infrastructure complicates debugging processes.
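The cold-start point is easier to see in code. A common mitigation is to keep expensive setup in module-level state so that warm invocations reuse it. The sketch below simulates that pattern locally; the setup function and the 50 ms delay are stand-ins, not measurements.

```python
import time

_client = None      # survives between invocations while the instance stays warm
init_count = 0      # counts how often the expensive setup actually ran


def _expensive_setup() -> dict:
    """Stand-in for slow work such as opening connections or loading config."""
    global init_count
    init_count += 1
    time.sleep(0.05)  # simulated initialization delay
    return {"ready": True}


def handler(event: dict, context: object = None) -> dict:
    global _client
    if _client is None:          # only true on a cold start
        _client = _expensive_setup()
    return {"ok": _client["ready"], "cold_starts": init_count}


first = handler({})   # pays the setup cost
second = handler({})  # reuses the already-initialized client
```

Both calls report a single cold start, because the second invocation finds `_client` already populated. On a real platform the reuse only lasts as long as the provider keeps that instance alive.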

Examples:

Leading cloud providers offer a range of serverless services and PaaS solutions. In AWS, Elastic Beanstalk and Lambda represent PaaS and serverless computing, respectively.

GCP provides Cloud Run for PaaS and Cloud Functions for serverless computing, while Azure offers App Service and Function Apps for the same roles.

Practical Use Cases of Serverless Applications:

Azure Function Apps exemplify event-driven solutions: functions can be triggered by schedules, manual actions, or specific events such as HTTP calls or uploads. Typical uses include:

● Automatic VM provisioning based on malfunction reports.

● Incrementing counters upon user uploads to storage.

● Periodic API calls to gather weather data for database updates.

● Automated invoice generation upon website orders.

● Data capture and transformation from event and IoT streams.

● Queue-based text processing for AI analysis and classification.

Serverless Computing vs. Other Cloud Models

| Aspect | Serverless Computing | Infrastructure as a Service (IaaS) | Containers | Container as a Service (CaaS) |
|---|---|---|---|---|
| Resource Management | Automatically managed by the platform. | Users manage virtualized resources, including servers, storage, and networking. | Containerized applications run within isolated environments. | The platform manages container orchestration, scaling, and infrastructure. |
| Deployment Granularity | Granular, with functions deployed independently. | Application-level deployment. | Application packaged with dependencies into containers. | Containerized application deployment is often orchestrated at the service level. |
| Scaling | Automatic scaling based on demand without manual intervention. | Manual or automatic scaling of virtual machines or resources. | Manual or automatic scaling of container instances. | Automatic scaling of container instances managed by the platform. |
| Management Overhead | Minimal management overhead as the platform handles infrastructure. | Moderate management overhead, requiring setup and maintenance of virtualized resources. | Moderate management overhead for container orchestration and management. | Reduced management overhead as the platform handles container orchestration and infrastructure. |
| Cost Structure | The pay-per-execution model typically based on usage | Pay-per-use or subscription model for virtualized resources | Pay-per-use model for container instances and associated resources | Pay-per-use or subscription model for container orchestration and management |
| Development Flexibility | Encourages small, focused functions conducive to microservices architecture. | Offers flexibility in application architecture but requires more manual configuration. | Supports containerized applications, providing portability across environments. | Promotes microservices architecture with container-based deployment. |
| Resource Utilization | Optimizes resource utilization by scaling resources dynamically. | Resource utilization dependent on manual or automatic scaling configurations. | Efficient resource utilization due to container isolation and portability. | Efficient resource utilization with dynamic scaling and orchestration |

Power of Serverless Computing: Practical Use Cases and Applications

Using serverless computing is driving significant transformations across industries. It enables companies to focus more on delivering value to their customers while reducing infrastructure management overhead and accelerating time-to-market.

Let's see some helpful use cases and practices of serverless computing:

1. Integrating with Third-Party Services and APIs

The serverless approach offers an efficient solution for integrating third-party services and APIs. By leveraging serverless functions, developers can easily create lightweight serverless workflows to connect with various external services and APIs.

This approach eliminates the need to manage and scale infrastructure, allowing seamless integration with minimal operational overhead.

2. Scheduled Tasks & Automation

Serverless platforms provide built-in capabilities for running scheduled tasks such as generating daily reports, performing backups, or executing recurring business logic.

Developers can define scheduled events to trigger serverless functions at specific times or intervals, automating routine tasks without the need to maintain dedicated servers or cron jobs.
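A scheduled function might look like the following sketch. It assumes the scheduler passes the trigger time as an ISO 8601 UTC timestamp in `event["time"]`; the handler name and the returned fields are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def daily_report_handler(event: dict, context: object = None) -> dict:
    """Build a report for the day before the scheduled trigger time."""
    # Assumes the scheduler passes an ISO 8601 UTC timestamp in event["time"].
    fired_at = datetime.strptime(event["time"], "%Y-%m-%dT%H:%M:%SZ")
    fired_at = fired_at.replace(tzinfo=timezone.utc)
    report_day = (fired_at - timedelta(days=1)).date()
    return {"report_for": report_day.isoformat(), "status": "generated"}
```

Deriving the report date from the event rather than from the system clock keeps the function deterministic, which makes reruns and local testing straightforward.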

3. Optimizing Workflow Automation with Serverless Solutions

Serverless computing enables efficient IT process automation by allowing developers to implement workflows for tasks like access removal, security checks, and approvals.

By leveraging serverless functions, organizations can automate repetitive IT processes, streamline operations, and ensure compliance with security policies while benefiting from the scalability and cost-effectiveness of serverless architectures.
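One way to picture such a workflow is as a chain of small functions, each handling one step. The step names, ticket fields, and approval rule below are invented for illustration; a real deployment would usually rely on the provider's workflow or orchestration service to chain the steps.

```python
from typing import Callable

Step = Callable[[dict], dict]


def security_check(ticket: dict) -> dict:
    # Hypothetical rule: accounts on the protected list fail the check.
    return {**ticket, "security_ok": ticket["user"] not in ticket.get("protected", [])}


def approve(ticket: dict) -> dict:
    # Hypothetical rule: only requests from a manager are approved.
    return {**ticket, "approved": ticket["security_ok"] and ticket["requested_by"] == "manager"}


def remove_access(ticket: dict) -> dict:
    return {**ticket, "access_removed": ticket["approved"]}


def run_workflow(ticket: dict, steps: list[Step]) -> dict:
    """Pass the ticket through each step in order, as chained functions would."""
    for step in steps:
        ticket = step(ticket)
    return ticket
```

Running `run_workflow({"user": "bob", "requested_by": "manager"}, [security_check, approve, remove_access])` ends with `access_removed` set to `True`; the same request from a non-manager ends with it set to `False`.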

4. Harnessing Serverless Computing for Efficient Real-Time Data Processing

Serverless platforms are well-suited for real-time data processing tasks, handling both structured and unstructured data streams with ease.

Organizations can process incoming data in real-time and perform analytics by utilizing a robust analytics service with serverless functions as event handlers, eliminating the need to manage the underlying infrastructure.
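A function acting as a stream event handler might look like this sketch. Streaming services commonly deliver records in batches with base64-encoded payloads; the exact event shape used here (`records`, `data`, `sensor`) is an assumption, not a specific provider's format.

```python
import base64
import json
from collections import Counter


def stream_handler(event: dict, context: object = None) -> dict:
    """Decode a batch of stream records and count readings per sensor."""
    counts: Counter = Counter()
    for record in event["records"]:
        payload = json.loads(base64.b64decode(record["data"]))
        counts[payload["sensor"]] += 1
    return dict(counts)


def encode(payload: dict) -> dict:
    """Helper for building a sample record the way a stream would deliver it."""
    return {"data": base64.b64encode(json.dumps(payload).encode()).decode()}


sample = {"records": [encode({"sensor": "a", "v": 1}),
                      encode({"sensor": "b", "v": 2}),
                      encode({"sensor": "a", "v": 3})]}
```

Calling `stream_handler(sample)` counts two readings for sensor `a` and one for sensor `b`. In practice the aggregated result would be forwarded to the analytics service rather than returned.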

5. CI/CD Pipelines

Serverless architectures facilitate the automation of CI/CD pipelines and enhance system monitoring capabilities by allowing developers to implement automated build, test, and deployment workflows using serverless functions.

By integrating serverless functions into CI/CD pipelines, organizations can achieve faster release cycles, improve development productivity, and reduce the operational overhead of managing traditional CI/CD infrastructure.

6. REST API Backends

Serverless functions offer an efficient way to build REST API backends, enabling developers to quickly deploy scalable and cost-effective API endpoints for web apps without managing servers or infrastructure.

Organizations can achieve high availability, scalability, and flexibility for their API-based applications by implementing serverless functions as API handlers while minimizing operational complexity and infrastructure costs.

7. Trigger-Based Actions or Running Scheduled Tasks (e.g., Daily Reports, Backups, Business Logic)

Serverless platforms provide robust support for trigger-based actions and scheduled tasks, allowing developers to define event-driven workflows for various use cases.

Whether it's triggering serverless functions in response to specific events or scheduling tasks to run at predetermined times, serverless computing simplifies the implementation of automated workflows, enabling organizations to streamline their operations and improve efficiency.

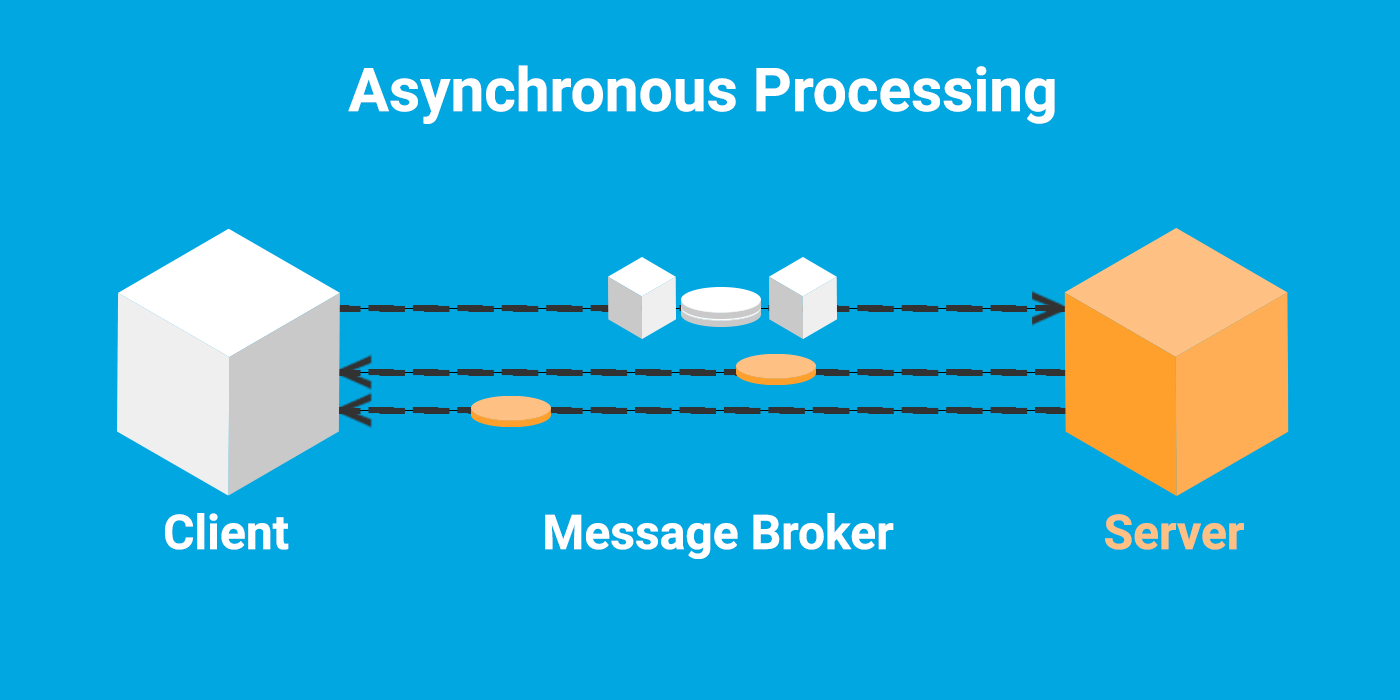

8. Asynchronous Processing

Serverless architectures excel at handling asynchronous processing tasks, such as batch processing, file processing, or background jobs.

With serverless functions, organizations can offload resource-intensive or time-consuming tasks to scalable and event-driven compute environments, ensuring efficient utilization of resources and timely execution of asynchronous processing tasks.
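A background worker of this kind can be sketched as a function that receives a batch of queued messages and reports which ones failed, so that only those are retried. The message shape and the `resize_image` task are hypothetical; queue services differ in how they expect failures to be reported.

```python
def resize_image(path: str) -> str:
    """Stand-in for slow background work; rejects non-image files."""
    if not path.endswith((".png", ".jpg")):
        raise ValueError(f"unsupported file: {path}")
    return f"resized {path}"


def process_batch(event: dict, context: object = None) -> dict:
    """Process queued messages; report failures so only those are retried."""
    failed = []
    for message in event["messages"]:
        try:
            resize_image(message["body"])
        except ValueError:
            failed.append(message["id"])
    return {"failed_ids": failed}
```

Catching errors per message, rather than letting one bad item fail the whole batch, avoids reprocessing work that already succeeded.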

9. Real-Time or Scheduled Notifications

Serverless and edge computing provide a reliable mechanism for delivering real-time or scheduled notifications to users or systems. By using serverless functions as notification handlers, organizations can implement event-driven notification workflows for alerts, reminders, updates, or other messages based on specific triggers or schedules.

This approach enables timely and personalized communication with stakeholders while benefiting from the scalability and cost-effectiveness of serverless architectures.

Leading Cloud Providers in Serverless Computing

Here’s a list of leading serverless providers:

| Serverless Provider | Description | Key Features |
|---|---|---|
| AWS Lambda | Event-driven, serverless computing platform within Amazon Web Services. | A comprehensive range of services is available, and it supports Java, Python, Node.js, and other languages. |
| Azure Functions by Microsoft | The serverless computing service is hosted on the Microsoft Azure public cloud. | Enables running application code on-demand in multiple languages, including Node.js, C#, Python, and more. |
| Google Cloud Functions (GCF) | Serverless, event-driven computing service within Google Cloud Platform. | Allows the creation of small, single-purpose functions and integrates with various cloud services. |
| IBM Cloud Functions | Serverless programming platform based on Apache OpenWhisk. | Supports multiple programming languages such as JavaScript and Python, and accelerates application development. |
| Apache OpenWhisk | Open-source distributed serverless cloud platform developed by the Apache Software Foundation. | Executes functions in response to events from various sources and offers per-request scaling and automatic resource allocation. |
| Spotinst | Offers multi-cloud infrastructure management solutions, automating workload deployment and migration. | Enables enterprises to run any workload and supports large-scale migrations on any cloud provider. |
| Cloudflare Workers | A suite of products facilitating serverless site development and extension without infrastructure management. | A lightweight JavaScript serverless execution environment enhances website performance, security, and reliability. |
| Oracle Fn Project | Open-source serverless compute platform supporting all programming languages, runnable anywhere. | Performant, easy-to-use solution for serverless computing across public, private, and hybrid clouds. |
| Kubernetes | An open-source system for container orchestration across multiple hosts was initially developed by Google. | Ideal for deploying various workloads, providing scalability, reliability, and portability. |

The Future of Serverless Computing

The serverless computing era offers various possibilities for businesses seeking to optimize their operations and accelerate innovation in the cloud.

With a diverse array of providers and services available, the decision to transition to serverless architecture warrants careful consideration and thorough research.

Assess your business needs, explore the potential benefits of serverless features, and evaluate which providers align best with your requirements. By delving into the realm of serverless computing with due diligence, you can unlock the full potential of cloud infrastructure for your organization's success.